Comparison of ChatGPT and Gemini AI in Answering Higher-Order Thinking Skill Biology Questions: Accuracy and Evaluation

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

References

Asrafil., Retnawati, h., & Retnowati, E. (2020). The Difficult of Students when Solving HOTS Problem and the Description of Students Cognitive Load After Given Worked Example as a Feedback. International Conference on Science Education and Technology. 1511(1). 1-11.

Bond, T. G., & Fox, C. M. (2013). Applying the Rasch model: Fundamental measurement in the human sciences. Psychology Press.

Carla, M. M., et al. (2024). Large Language Models as Assistance for Glaucoma Surgical Cases: a ChatGpt VS Google Gemini Comparison. Graefe's Archive for Clinical and Experimental Ophthalmology, 262(9), 2945-2959.

Chen, L., Chen, P., & Lin, Z. (2020). Artificial Intelligence in Education: A Review. Ieee Access. 8, 75264-75278.

Dalalah, D., & Dalalah, O. M. (2023). The false positives and false negatives of generative AI detection tools in education and academic research: The case of ChatGPT. The International Journal of Management Education, 21(2), 100822.

Danry, V., et al. (2023). Don’t Just Tell Me, Ask Me: AI Systems that Intelligently Frame Explanations as Questions Improve Human Logical Discernment Accuracy Over Causal AI Explanations. Proceedings of the 2023 CHI Convergence on Human Factors in Computing Systems. 23(352), 1-13.

Dilekli, Y., & Boyraz, S. (2024). From “Can AI think” to “Can AI help thinking deeper?”: Is use of Chat GPT in higher education a tool of transformation or fraud?. International Journal of Modern Education Studies. 8(1), 49-71.

Firdaus, T. (2022). Penerapan Model Direct Instruction Berbasis Sets Pada Pembelajaran Ipa Untuk Meningkatkan Keterampilan Berpikir Kritis Siswa. Natural Science Education Research 5(1), 119-134. https://doi.org/10.21107/nser.v5i1.15759

Firdaus, T., Ahied, M., Qomaria, N., Putera, D. B. R. A., & Sutarja, M. C. (2022). Jurnal Pembelajaran Sains. Jurnal Pembelajaran Sains, 6(1), 15-23.

Firdaus, T. (2023). Representative platform cyber metaverse terkoneksi BYOD sebagai upaya preventive urgensi digital pada sistem pendidikan Indonesia. Jurnal Integrasi dan Harmoni Inovatif Ilmu-Ilmu Sosial, 3(2), 123-131. https://doi.org/10.17977/um063v3i2p123-131

Joseph, G. V., et al. (2024). Impact of Digital Literacy, Use of AI tools and Peer Collaboration on AI Assisted Learning: Perceptions of the University Students. Digital Education Review, 45, 43-49.

Karataş, F., Abedi, F. Y., Ozek Gunyel, F., Karadeniz, D., & Kuzgun, Y. (2024). Incorporating AI in foreign language education: An investigation into ChatGPT’s effect on foreign language learners. Education and Information Technologies, 29, 19343–19366.

Latifah, A., & Maryani, I. (2021). Developing HOTS Questions for the Materials of Human and Animals Respiratory Organs for Grade V of Elementary School. Jurnal Prima Edukasia, 9(2), 179-192.

Muchlis & Maulida, R. (2024). Komparasi Respons ChatGPT dan Gemini terhadap Command Pattern Identik dengan Metode Black Box. Jurnal Teknik Informatika STMIK Antar Bangsa, 10(2). 68-71. https://ejournal.antarbangsa.ac.id

Nirwani, N., Priyanto. (2024). Integrasi Artifical Intelegence dalam Pembelajaran Bahasa di SMP. Jurnal Pendidikan Bahasa dan Sastra, 7(1), 31-38.

Obaigbena, A., et al. (2024). AI and Human-Robot Interaction: A Riview of Recent Advances and Challenges. GSC Advanced Research and Reviews. 18(02). 321-330.

Owan, V. J., et al. (2023). Exploring the Potential of Artificial Intellegence Tools in Educational Measurement and Assessment. Journal of Mathematics, Science and Technology Education, 19(8), 1-15.

Rane, N. L., Choudhary, S. P., & Rane, J. (2024). Gemini or ChatGPT? Efficiency, Performance, and Adaptability of Cutting-Edge Generative Artificial Intelligence (AI) in Finance and Accounting. 1-12.

Rane, N. R., Choudhary, S.P., Rane, J. (2024). Gemini versus ChatGPT: applications, performance, architecture, capabilities, and implementation. Journal of Applied Artificial Intelligence, 5(1), 69-93. https://doi.org/10.48185/jaai.v5i1.1052

Sugiyono. (2012). Metode Penelitian Kuantitatif, Kualitatif, dan R&D. Bandung: Alfabeta.

Wang, Y., Shen, S., & Lim, B. Y. (2023, April). Reprompt: Automatic prompt editing to refine ai-generative art towards precise expressions. Proceedings of the 2023 CHI conference on human factors in computing systems (pp. 1-29). https://doi.org/10.1145/3544548.3581402

World Bank. (2024). Who on Earth Is Using Generative AI?

Yasmar, R., & Amalia, D. R. (2024). ANALISIS SWOT PENGGUNAAN CHAT GPT DALAM DUNIA PENDIDIKAN ISLAM. Fitrah: Jurnal Studi Pendidikan, 15(1), 43-64. https://doi.org/10.47625/fitrah.v15i1.668

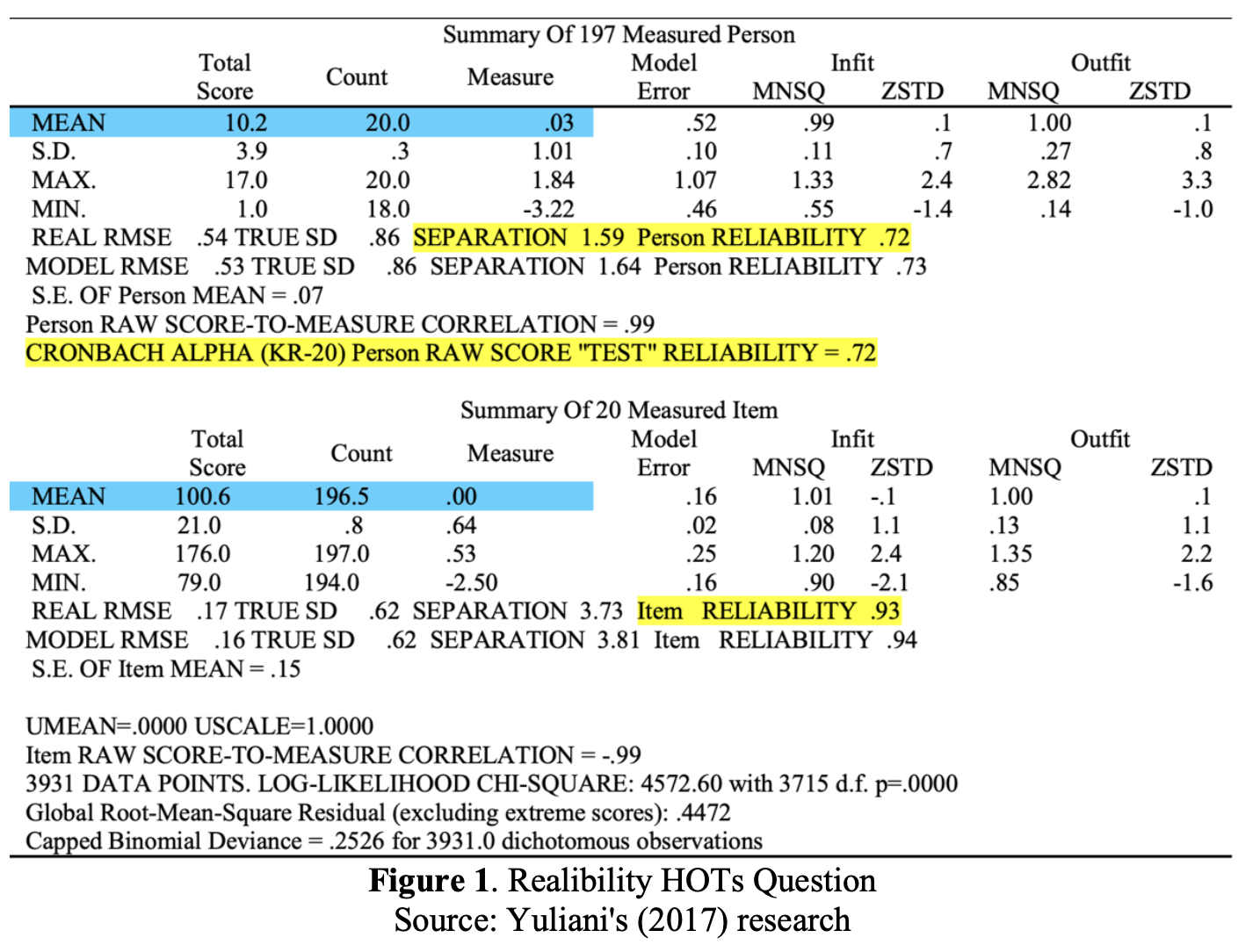

Yuliani, E. (2017). Pengembangan Manual Test berbasis Higher Order Thinking Skill (HOTs) Serta Implementasinya di SMA Unggul Negeri 8 Palembang. (Disestation, Universitas Islam Negeri (UIN) Raden Fatah Palembang).